Difference between revisions of "Data Warehouse"

Revision as of 03:28, 10 May 2014

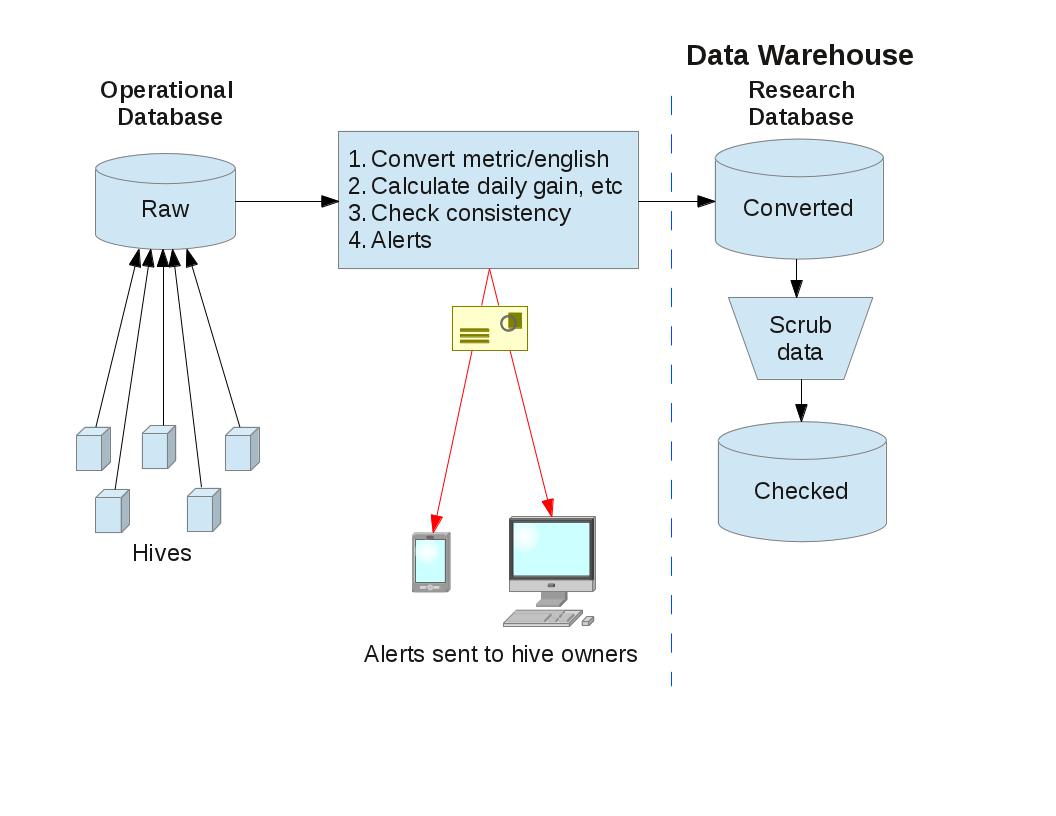

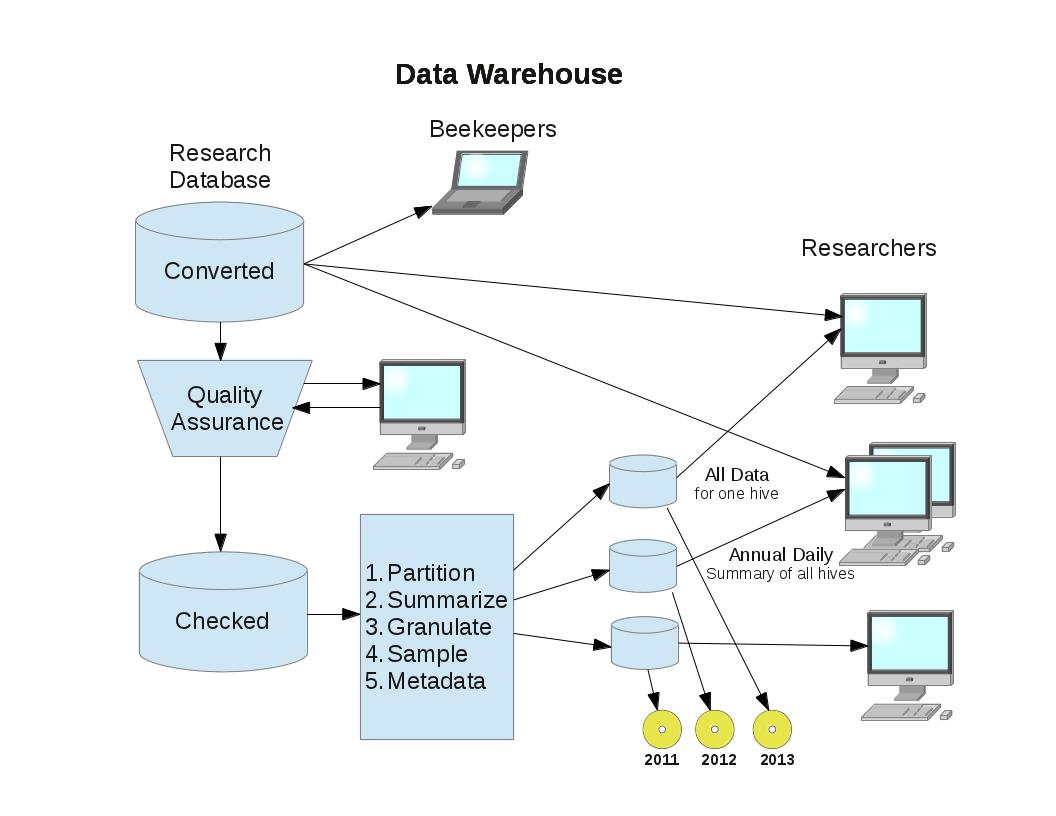

HiveTool is open source and open notebook: the entire primary record is publicly available online as it is recorded. Storing, organizing, and providing access to this data is challenging. Each hive sends in data every five minutes, 288 times a day, inserting over 100,000 rows a year into the Operational Database. One thousand hives would generate over 100 million rows per year.
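The row counts above follow directly from the five-minute reporting interval. A minimal sketch of the arithmetic, assuming a 365-day year and no missed readings (the function name is illustrative only):

```python
READINGS_PER_DAY = 24 * 60 // 5  # one reading every five minutes = 288 per day
DAYS_PER_YEAR = 365              # assumption: non-leap year, no gaps in reporting


def rows_per_year(hives: int) -> int:
    """Rows inserted per year if each reading becomes one row."""
    return hives * READINGS_PER_DAY * DAYS_PER_YEAR


print(rows_per_year(1))     # 105120
print(rows_per_year(1000))  # 105120000
```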

In addition to the measured data, there are external factors that need to be systematically and consistently documented. Metadata includes hive genetics, manipulations, mite treatments, etc.

The procedures that move the data from the Operational Database to the Research Database should:

- Structure the data so that it makes sense to the researcher.

- Structure the data to optimize query performance, even for complex analytic queries, without impacting the operational systems.

- Make research and decision-support queries easier to write.

- Maintain data and conversion history.

- Improve data quality with consistent quality codes and descriptions, flagging and fixing bad data.
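As an illustration of the last point, a quality code could be attached to each reading as it is moved into the Research Database. The sketch below is hypothetical: the code values, the `Reading` fields, and the plausible weight range are assumptions, not part of HiveTool.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical quality codes; the source does not define a code list.
QUALITY_OK = 0
QUALITY_MISSING = 1
QUALITY_OUT_OF_RANGE = 2


@dataclass
class Reading:
    hive_id: str
    weight_lb: Optional[float]  # None when the scale reported nothing


def quality_code(reading: Reading, min_lb: float = 0.0, max_lb: float = 500.0) -> int:
    """Flag a reading as OK, missing, or outside an assumed plausible range."""
    if reading.weight_lb is None:
        return QUALITY_MISSING
    if not (min_lb <= reading.weight_lb <= max_lb):
        return QUALITY_OUT_OF_RANGE
    return QUALITY_OK
```

Storing the code next to the raw value, rather than deleting bad rows, keeps the primary record intact while letting researchers filter on quality.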

The data needs to be:

- cleaned up

- converted (lb <=> kg, Fahrenheit <=> Celsius)

- partitioned into yearly or seasonal periods

- transformed (manipulation changes filtered out)

- summarized (daily weight changes)

- cataloged, and

- made available to researchers for data mining, online analytical processing, research, and decision support
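Several of the steps listed above (conversion, filtering out manipulation changes, and daily summarization) can be sketched in a few lines. This is only a sketch under stated assumptions: the function names are illustrative, and treating any jump larger than 1 kg between consecutive readings as a manipulation is an assumed heuristic, not HiveTool's documented method.

```python
from collections import defaultdict
from datetime import datetime

KG_PER_LB = 0.45359237  # exact definition of the international pound


def lb_to_kg(lb: float) -> float:
    return lb * KG_PER_LB


def f_to_c(deg_f: float) -> float:
    return (deg_f - 32.0) * 5.0 / 9.0


def daily_weight_changes(readings, manipulation_threshold_kg=1.0):
    """Summarize net weight change per day for one hive.

    readings: time-ordered list of (datetime, weight_kg) tuples.
    Jumps larger than the threshold between consecutive readings are
    assumed to be manipulations (e.g. adding a super) and are skipped.
    """
    changes = defaultdict(float)
    for (_, w0), (t1, w1) in zip(readings, readings[1:]):
        delta = w1 - w0
        if abs(delta) > manipulation_threshold_kg:
            continue  # assumed manipulation, excluded from the summary
        changes[t1.date()] += delta
    return dict(changes)
```

A change is attributed to the day of the later reading, so a step that spans midnight counts toward the new day.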